Mutual information Venn diagram

Importance-Driven Time-Varying Data Visualization (Chaoli Wang, Hongfeng Yu, Kwan-Liu Ma, University of California, Davis): Importance-Driven Volume Rendering; Differences; Questions; Related Work; Importance Analysis; Information Theory; Relations with Venn Diagram ... Unit 3 Module 4, Algorithmic Information Dynamics: A Computational Approach to Causality and Living Systems, From Networks to Cells, by Hector Zenil and Narsis ...

Mutual information is used in cosmology to test the influence of large-scale environments on galaxy properties in Galaxy Zoo. In solar physics, it has been used to derive the solar differential rotation profile, a travel-time deviation map for sunspots, and a time-distance diagram from quiet-Sun measurements.

Harry places his information bits in locations 1-4 in the Venn diagram. [3] He then fills in the parity bits 5-7 so that each of the circles A, B, and C contains an even number of 1s. He can then send the four information bits followed by the three parity bits to Sally, for example 0100 101, and she can fill in the bits on her own Venn diagram.

Definition: The mutual information between two continuous random variables X, Y with joint p.d.f. f(x,y) is given by

\[ I(X;Y) = \iint f(x,y)\,\log\frac{f(x,y)}{f(x)\,f(y)}\,dx\,dy. \tag{26} \]

For two variables it is possible to represent the different entropic quantities with an analogy to set theory. In Figure 4 we see the different quantities, and how the mutual ...

For better understanding, the relationship between entropy and mutual information has been depicted in the following Venn diagram, where the area shared by the two circles is the mutual information.

Properties of Mutual Information. The main properties of the mutual information are the following:

Non-negative: \(I(X; Y) \geq 0\)
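Harry's parity rule can be sketched in a few lines of Python. The region layout below (which numbered regions fall inside circles A, B, and C) is an assumption, since the diagram itself is not reproduced on this page; it was chosen so that the example data bits 0100 produce the parity bits 101 quoted above. The names `CIRCLES`, `encode`, and `correct` are hypothetical.

```python
# Hypothetical region layout (an assumption, not taken from the source figure):
# data regions 1-4 lie in overlaps, parity regions 5-7 lie in one circle each.
CIRCLES = {
    "A": (1, 2, 4, 5),
    "B": (1, 3, 4, 6),
    "C": (2, 3, 4, 7),
}


def encode(data):
    """Place 4 data bits in regions 1-4, then set parity bits 5-7 so that
    every circle contains an even number of 1s. Returns bits for regions 1-7."""
    if len(data) != 4 or any(b not in (0, 1) for b in data):
        raise ValueError("expected four bits")
    bits = {region: b for region, b in zip(range(1, 5), data)}
    for parity_region, name in zip((5, 6, 7), "ABC"):
        data_sum = sum(bits[r] for r in CIRCLES[name] if r <= 4)
        bits[parity_region] = data_sum % 2
    return [bits[r] for r in range(1, 8)]


def correct(received):
    """Receiver side: find the circles with odd parity and flip the one region
    lying in exactly those circles (fixes at most one flipped bit)."""
    bits = dict(zip(range(1, 8), received))
    odd = {name for name, regions in CIRCLES.items()
           if sum(bits[r] for r in regions) % 2 == 1}
    if odd:
        for r in range(1, 8):
            if {name for name, regions in CIRCLES.items() if r in regions} == odd:
                bits[r] ^= 1
                break
    return [bits[r] for r in range(1, 8)]


print(encode([0, 1, 0, 0]))  # [0, 1, 0, 0, 1, 0, 1], i.e. 0100 101
```

Because every region belongs to a different combination of circles, the set of circles with odd parity identifies which single bit Sally should flip.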
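Definition (26) can be checked numerically. A minimal sketch, assuming a standard bivariate normal with correlation rho (chosen here for illustration because its mutual information has the known closed form \(-\tfrac{1}{2}\log(1-\rho^2)\) nats): a midpoint Riemann sum over a truncated square approximates the double integral, and the result should match the closed form and be nonnegative. The function names are hypothetical.

```python
import math


def gaussian_mi_numeric(rho, half_width=8.0, n=400):
    """Approximate I(X;Y) = double integral of f(x,y) log[f(x,y) / (f(x) f(y))]
    (in nats) for a standard bivariate normal with correlation rho, using a
    midpoint Riemann sum on the square [-half_width, half_width]^2."""
    if not -1.0 < rho < 1.0:
        raise ValueError("need |rho| < 1")
    h = 2 * half_width / n
    one_minus = 1.0 - rho * rho
    log_norm_joint = -math.log(2 * math.pi * math.sqrt(one_minus))
    log_norm_marg = -0.5 * math.log(2 * math.pi)
    total = 0.0
    for i in range(n):
        x = -half_width + (i + 0.5) * h
        for j in range(n):
            y = -half_width + (j + 0.5) * h
            # Work with log densities to avoid log(0) far out in the tails.
            log_f = log_norm_joint - (x * x - 2 * rho * x * y + y * y) / (2 * one_minus)
            log_fx = log_norm_marg - 0.5 * x * x
            log_fy = log_norm_marg - 0.5 * y * y
            total += math.exp(log_f) * (log_f - log_fx - log_fy)
    return total * h * h


def gaussian_mi_exact(rho):
    """Closed form for the bivariate normal: I(X;Y) = -1/2 log(1 - rho^2) nats."""
    return -0.5 * math.log(1.0 - rho * rho)


print(gaussian_mi_numeric(0.6), gaussian_mi_exact(0.6))  # both approximately 0.2231
```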

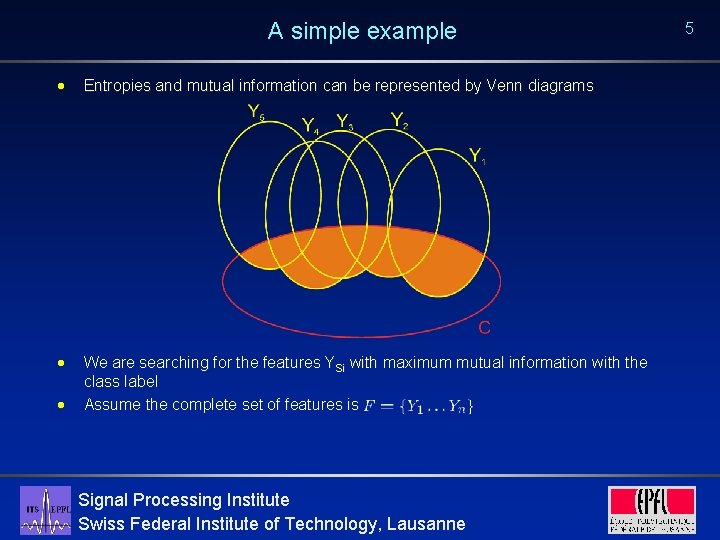

The entropy of a pair of random variables is commonly depicted using a Venn diagram. This representation is potentially misleading, however, since the multivariate mutual information can be negative. This paper presents new measures of multivariate information content that can be accurately depicted using Venn diagrams for any number of random variables.

Venn diagram representation of mutual information. (A) The pairwise mutual information \(I(G_1; G_2)\) corresponds to the intersection of the two circles and is always nonnegative. (B) The three-way mutual information \(I(G_1; G_2; G_3)\) corresponds to the intersection of the three circles, but it can be negative.

A Venn diagram is an illustration that uses circles to show the relationships among things or finite groups of things. Circles that overlap have a commonality while circles that do not overlap do ...

2.25 Venn diagrams. There isn't really a notion of mutual information common to three random variables. Here is one attempt at a definition: using Venn diagrams, we can see that the mutual information common to three random variables X, Y, and Z can be defined by \(I(X;Y;Z) = I(X;Y) - I(X;Y|Z)\). This quantity is symmetric in X, Y, and Z ...
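The definition \(I(X;Y;Z) = I(X;Y) - I(X;Y|Z)\) can be evaluated directly, which makes the sign problem concrete. A minimal sketch, assuming the textbook XOR construction (X and Y independent fair bits, Z = X XOR Y), chosen here for illustration rather than taken from the quoted sources: the pairwise term is 0 bits, the conditional term is 1 bit, so the "intersection of three circles" is -1 bit. Helper names such as `cond_mutual_info` are hypothetical.

```python
import math
from itertools import product


def entropy(pmf):
    """Shannon entropy in bits of a dict mapping outcomes to probabilities."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0)


def marginal(joint, idx):
    """Marginalize a joint pmf over tuples onto the coordinates in idx."""
    out = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        out[key] = out.get(key, 0.0) + p
    return out


def H(joint, idx):
    return entropy(marginal(joint, idx))


def mutual_info(joint, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B); a and b are tuples of coordinates."""
    return H(joint, a) + H(joint, b) - H(joint, a + b)


def cond_mutual_info(joint, a, b, c):
    """I(A;B|C) = H(A,C) + H(B,C) - H(A,B,C) - H(C)."""
    return H(joint, a + c) + H(joint, b + c) - H(joint, a + b + c) - H(joint, c)


# X, Y independent fair bits and Z = X XOR Y, stored as coordinates 0, 1, 2.
joint = {(x, y, x ^ y): 0.25 for x, y in product((0, 1), repeat=2)}

i_xy = mutual_info(joint, (0,), (1,))
i_xy_given_z = cond_mutual_info(joint, (0,), (1,), (2,))
i_xyz = i_xy - i_xy_given_z
print(i_xy, i_xy_given_z, i_xyz)  # 0.0 1.0 -1.0
```

Conditioning on Z makes X and Y perfectly dependent, so here conditioning increases the pairwise mutual information, and the central "area" comes out negative.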

Information measures: mutual information. 2.1 Divergence: main inequality. Theorem 2.1 (Information Inequality). \(D(P\|Q) \geq 0\); \(D(P\|Q) = 0\) iff \(P = Q\). ...

The following Venn diagram illustrates the relationship between entropy, conditional entropy, joint entropy, and mutual information. The interpretation is exactly the same as if you were evaluating Venn diagrams of two sets. The common area between the two "sets" is the mutual information, each non-overlapping area is a conditional entropy, and each circle represents the overall uncertainty of its corresponding variable.

Entropy and Mutual Information. Erik G. Learned-Miller, Department of Computer Science, University of Massachusetts, Amherst, Amherst, MA 01003. September 16, 2013. Abstract: This document is an introduction to entropy and mutual information for discrete random variables. It gives their definitions in terms of probabilities, and a few simple examples.
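The region-by-region reading of the two-circle diagram can be checked numerically for discrete variables. A minimal sketch, assuming a small hypothetical joint probability table: each region is computed from entropies via \(I(X;Y) = H(X) + H(Y) - H(X,Y)\) and \(H(X|Y) = H(X,Y) - H(Y)\), and the three disjoint regions add up to the joint entropy.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero-probability cells contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def venn_regions(joint):
    """Information-diagram regions (bits) for a joint pmf given as joint[x][y]."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    h_x, h_y = entropy(px), entropy(py)
    h_xy = entropy([p for row in joint for p in row])
    return {
        "H(X)": h_x,                  # left circle
        "H(Y)": h_y,                  # right circle
        "H(X,Y)": h_xy,               # union of both circles
        "H(X|Y)": h_xy - h_y,         # left circle minus the overlap
        "H(Y|X)": h_xy - h_x,         # right circle minus the overlap
        "I(X;Y)": h_x + h_y - h_xy,   # the overlap
    }


# Hypothetical joint distribution: Y copies a fair bit X but flips it 20% of the time.
joint = [[0.4, 0.1],
         [0.1, 0.4]]
for name, value in venn_regions(joint).items():
    print(f"{name:7s} {value:.3f}")
```

Passing the transposed table swaps the roles of X and Y but leaves `I(X;Y)` unchanged, which is the symmetry the shared overlap expresses.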

Figure 3: The Venn diagram of some information theory concepts (entropy, conditional entropy, information gain). Taken from nature.com. (Tung.M.Phung, May 20, 2021; June 25, 2021.)

Now, analogous to the Taylor diagram is our mutual information diagram, where a given variable is plotted radially, with radius equal to the square root of its entropy, and the angle with the X axis is given by the normalized mutual information. ...

As an information-theoretic concept, each atom is a mutual information of some of the variables conditioned on the remaining variables. For example, we can immediately read from the diagram that the joint entropy of all three variables \(H(A, B, C)\), that is, the union of all three, can be written as: ...

In general, the mutual information between two variables can increase or decrease when conditioning on a third variable. Venn diagrams applied to joint entropies are therefore misleading when more than two variables are involved.
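One way to read \(H(A,B,C)\) off the three-circle diagram is as the sum of its seven atoms: three conditional entropies, three conditional mutual informations, and the central term \(I(A;B;C)\). The sketch below, using a hypothetical joint distribution of three bits, computes each atom from joint entropies and confirms that they add up to \(H(A,B,C)\). The central atom is computed as \(I(A;B) - I(A;B|C)\) and is the only one that may be negative.

```python
import math
from itertools import product


def H(joint, idx):
    """Entropy in bits of the variables at positions idx of a joint pmf
    given as {outcome_tuple: probability}."""
    marg = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * math.log2(p) for p in marg.values() if p > 0)


def atoms(joint):
    """The seven regions of the three-variable information diagram."""
    def h(*idx):
        return H(joint, idx)

    a, b, c = 0, 1, 2
    h_abc = h(a, b, c)
    i_ab_given_c = h(a, c) + h(b, c) - h_abc - h(c)
    return {
        "H(A|B,C)": h_abc - h(b, c),
        "H(B|A,C)": h_abc - h(a, c),
        "H(C|A,B)": h_abc - h(a, b),
        "I(A;B|C)": i_ab_given_c,
        "I(A;C|B)": h(a, b) + h(b, c) - h_abc - h(b),
        "I(B;C|A)": h(a, b) + h(a, c) - h_abc - h(a),
        "I(A;B;C)": h(a) + h(b) - h(a, b) - i_ab_given_c,  # may be negative
    }


# Hypothetical joint pmf of three binary variables (weights 1..8, normalised).
weights = dict(zip(product((0, 1), repeat=3), range(1, 9)))
total = sum(weights.values())
joint = {outcome: w / total for outcome, w in weights.items()}

parts = atoms(joint)
print(sum(parts.values()), H(joint, (0, 1, 2)))  # the two numbers agree
```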

Examining the link between information theory and set theory shows that information-theoretic formulas can be represented visually by Venn diagrams. In such a diagram, each disk represents the "variability" of a variable, and Shannon's entropy is the measure of that variability.

Area-proportional Venn diagrams. A Venn diagram can be used to illustrate the informational relationship between mutual information, \(I(X;Y)\), entropy, \(H(X)\), and conditional entropy, \(H(X|Y)\), as portions of the joint entropy \(H(X,Y)\), as illustrated in figure 2. Furthermore, it is possible to generate area-proportional Venn diagrams (Chow and Ruskey ...

Mutual Exclusivity, Venn Diagrams and Probability (Level 1-2): "For a class of 20 students, the Venn diagram on the right shows how many students play ..."

We may use an information diagram, which is a variation of a Venn diagram, to represent the relationship between Shannon's information measures. This is similar to the use of the Venn diagram to represent the relationship between probability measures. These ... (The name mutual information and the notation I(X;Y) were introduced by [Fano, 1961, Ch 2].)

2.3 RELATIVE ENTROPY AND MUTUAL INFORMATION

The entropy of a random variable is a measure of the uncertainty of the random variable; it is a measure of the amount of information required on the average to describe the random variable. In this section we introduce two related concepts: relative entropy and mutual information.
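Both concepts are easy to compute for discrete distributions, and they are connected: mutual information is the relative entropy between the joint distribution and the product of its marginals, \(I(X;Y) = D\big(p(x,y)\,\|\,p(x)p(y)\big)\). A minimal sketch in bits, with distributions given as plain Python lists (an assumption made for brevity; the function names are hypothetical):

```python
import math


def kl_divergence(p, q):
    """D(p||q) in bits for pmfs given as equal-length lists. Terms with p_i = 0
    contribute 0; any p_i > 0 with q_i = 0 makes the divergence infinite."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            if qi == 0:
                return math.inf
            total += pi * math.log2(pi / qi)
    return total


def mutual_info_as_kl(joint):
    """I(X;Y) = D(p(x,y) || p(x) p(y)) for a joint pmf given as joint[x][y]."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    flat_joint = [p for row in joint for p in row]      # order: x, then y
    flat_product = [a * b for a in px for b in py]      # same order
    return kl_divergence(flat_joint, flat_product)


print(mutual_info_as_kl([[0.4, 0.1], [0.1, 0.4]]))      # dependent: positive
print(mutual_info_as_kl([[0.25, 0.25], [0.25, 0.25]]))  # independent: 0.0
```

Since \(D(p\|q) \ge 0\) with equality only when the distributions coincide, this form also shows why mutual information is nonnegative and vanishes exactly for independent variables.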

The reason is that, unlike mutual information between two variables, high-dimensional mutual information is ill-defined. In textbooks and theoretical essays, three-dimensional mutual information is proposed based on the Venn diagram. Unfortunately, mutual information defined in this way is not necessarily nonnegative.

The mutual information is a concept that relates two random variables. The extension to three or more variables is not very natural or useful (and in that setting the Venn diagram is misleading, because it suggests that the mutual information is non-negative) ...

Uncertainty measure. Let X be a random variable taking on a finite number M of different values \(x_1, \ldots, x_M\) with probabilities \(p_1, \ldots, p_M\), where \(p_i > 0\) and \(\sum_{i=1}^{M} p_i = 1\). What is X? For example: an English letter in a file, the last digit of the Dow-Jones index, the result of a coin toss, or a password. Question: what is the uncertainty associated with X? Intuitively, there are a few properties that an uncertainty measure should satisfy ...
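The measure such properties are usually meant to motivate is Shannon's entropy, \(H(X) = -\sum_{i=1}^{M} p_i \log_2 p_i\). A short sketch evaluating it on the kinds of examples listed above, with probabilities made up for illustration: a fair coin gives 1 bit, a biased coin gives less, and a uniform choice among 26 letters gives \(\log_2 26\) bits, the largest value possible for M = 26.

```python
import math


def shannon_entropy(probs):
    """H(X) = -sum_i p_i log2 p_i in bits, under the slide's assumptions:
    every p_i > 0 and the p_i sum to 1."""
    if any(p <= 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("need p_i > 0 summing to 1")
    return -sum(p * math.log2(p) for p in probs)


fair_coin = shannon_entropy([0.5, 0.5])            # 1.0 bit
biased_coin = shannon_entropy([0.9, 0.1])          # below 1 bit
uniform_letters = shannon_entropy([1 / 26] * 26)   # log2(26), about 4.70 bits
print(fair_coin, biased_coin, uniform_letters)
```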

An information diagram is a type of Venn diagram used in information theory to illustrate relationships among Shannon's basic measures of information: entropy, joint entropy, conditional entropy and mutual information. Information diagrams are a useful pedagogical tool for teaching and learning about these basic measures of information.

Think of it like a Venn diagram, where the intersection is the mutual information. Remember, if A depends on B, then B also depends on A: the information each variable carries about the other is the same in both directions, \(I(A;B) = I(B;A)\).

... mutual information is the same as the uncertainty contained in Y (or X) alone, which is measured by the entropy of Y (or X). The mutual information is then the entropy of Y (or X) [9]. Figure 1: Venn diagram of mutual information \(I(X;Y)\) associated with correlated variables X and Y. The area covered by the two circles together (their union) is the joint entropy \(H(X,Y)\).
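This can be checked on a small example where Y is a deterministic function of X, so that \(H(Y|X) = 0\) and therefore \(I(X;Y) = H(Y) - H(Y|X) = H(Y)\). A minimal sketch, assuming X uniform over four values (an illustrative choice; `mi_of_function` is a hypothetical name): with a one-to-one function, \(I(X;Y) = H(X) = H(Y)\); with a many-to-one function, \(I(X;Y) = H(Y) < H(X)\).

```python
import math
from collections import Counter


def entropy_bits(counts):
    """Entropy in bits of the empirical distribution given by a Counter."""
    n = sum(counts.values())
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def mi_of_function(xs, g):
    """For X uniform over the distinct values xs and Y = g(X), return
    (H(X), H(Y), I(X;Y)) with I(X;Y) = H(X) + H(Y) - H(X,Y)."""
    h_x = entropy_bits(Counter(xs))
    h_y = entropy_bits(Counter(g(x) for x in xs))
    h_xy = entropy_bits(Counter((x, g(x)) for x in xs))
    return h_x, h_y, h_x + h_y - h_xy


xs = [0, 1, 2, 3]
print(mi_of_function(xs, lambda x: (x + 1) % 4))  # one-to-one: (2.0, 2.0, 2.0)
print(mi_of_function(xs, lambda x: x % 2))        # many-to-one: (2.0, 1.0, 1.0)
```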

